Huffman coding

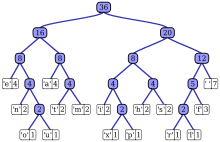

Huffman coding is a way of encoding data. The method was developed by David A. Huffman at MIT and was first published in 1952 as "A Method for the Construction of Minimum-Redundancy Codes".[1] The method produces a prefix code and is a form of lossless data compression. The idea behind it is very simple: a dictionary lists the symbols in the text (for example, characters or words) and how often each one occurs. Each symbol is replaced by a variable-length code. Symbols that occur more often get shorter codes. Each code must be unique, and no code may be the beginning (prefix) of another code, so that the coded text can be read back without ambiguity.

| Character | Frequency | Code |
|---|---|---|
| space | 7 | 111 |
| a | 4 | 010 |
| e | 4 | 000 |
| f | 3 | 1101 |
| h | 2 | 1010 |
| i | 2 | 1000 |
| m | 2 | 0111 |
| n | 2 | 0010 |
| s | 2 | 1011 |
| t | 2 | 0110 |
| l | 1 | 11001 |
| o | 1 | 00110 |
| p | 1 | 10011 |
| r | 1 | 11000 |
| u | 1 | 00111 |
| x | 1 | 10010 |
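To see how a table like the one above is used, here is a minimal Python sketch. The function names (`is_prefix_free`, `encode`, `decode`) and the sample sentence are chosen for illustration and are not part of the original article; the sentence was picked because its character counts match the frequencies in the table.

```python
# Code table copied from the table above (" " stands for the space character).
CODES = {
    " ": "111", "a": "010", "e": "000", "f": "1101",
    "h": "1010", "i": "1000", "m": "0111", "n": "0010",
    "s": "1011", "t": "0110", "l": "11001", "o": "00110",
    "p": "10011", "r": "11000", "u": "00111", "x": "10010",
}

# Illustrative sample text (an assumption, chosen to match the frequencies).
SAMPLE = "this is an example of a huffman tree"


def is_prefix_free(codes):
    """Return True if no code word is the beginning of another one."""
    words = sorted(codes.values())
    # After sorting, a word that is a prefix of any other word is also
    # a prefix of the word right after it, so neighbours are enough.
    return all(not b.startswith(a) for a, b in zip(words, words[1:]))


def encode(text, codes):
    """Replace every character by its code word."""
    return "".join(codes[ch] for ch in text)


def decode(bits, codes):
    """Read the bits left to right, emitting a symbol at each full code word."""
    reverse = {word: symbol for symbol, word in codes.items()}
    out, current = [], ""
    for bit in bits:
        current += bit
        if current in reverse:
            out.append(reverse[current])
            current = ""
    if current:
        raise ValueError("bit string ends in the middle of a code word")
    return "".join(out)


bits = encode(SAMPLE, CODES)
print(len(SAMPLE), "characters ->", len(bits), "bits")
print(decode(bits, CODES) == SAMPLE)
```

With this table, the 36-character sample takes 135 bits, compared with 288 bits if every character used 8 bits. Decoding needs no separators between code words because the code is prefix-free.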

The output from Huffman's algorithm is a variable-length code table for encoding a source symbol (such as a character in a file). The algorithm constructs this table based on the estimated probability or frequency of occurrence (weight) of each possible value of the source symbol. As in other entropy encoding methods, more common symbols are generally represented using fewer bits than less common symbols. Huffman's method can be implemented efficiently: if the input weights are already sorted, a code can be found in time linear in the number of weights.[2] Huffman's method is optimal among methods that encode each symbol separately, but it is not always optimal among all compression methods. When a better compression ratio is required, it can be replaced with arithmetic coding[3] or asymmetric numeral systems[4].
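The construction of such a code table can be sketched in a few lines of Python. This is a minimal illustration, not the linear-time method mentioned above: it uses a binary heap (`heapq`), which takes O(n log n) time but does not need sorted input. The function name `huffman_codes` is hypothetical, and symbols are assumed to be strings. The frequencies are the ones from the table above.

```python
import heapq
from itertools import count


def huffman_codes(freqs):
    """Build a prefix code from a {symbol: weight} mapping.

    Sketch only: symbols are assumed to be strings (not tuples),
    because tuples are used here to represent internal tree nodes.
    """
    if not freqs:
        return {}
    if len(freqs) == 1:
        # A single symbol still needs one bit to be written down.
        return {symbol: "0" for symbol in freqs}

    # The counter breaks ties so the heap never has to compare tree nodes.
    tiebreak = count()
    heap = [(weight, next(tiebreak), symbol) for symbol, weight in freqs.items()]
    heapq.heapify(heap)

    # Bottom-up step: repeatedly join the two lightest nodes.
    while len(heap) > 1:
        w1, _, left = heapq.heappop(heap)
        w2, _, right = heapq.heappop(heap)
        heapq.heappush(heap, (w1 + w2, next(tiebreak), (left, right)))

    # Walk the finished tree: left edge adds "0", right edge adds "1".
    codes = {}
    stack = [(heap[0][2], "")]
    while stack:
        node, prefix = stack.pop()
        if isinstance(node, tuple):
            stack.append((node[0], prefix + "0"))
            stack.append((node[1], prefix + "1"))
        else:
            codes[node] = prefix
    return codes


# Frequencies from the table above (" " stands for the space character).
FREQS = {
    " ": 7, "a": 4, "e": 4, "f": 3, "h": 2, "i": 2, "m": 2, "n": 2,
    "s": 2, "t": 2, "l": 1, "o": 1, "p": 1, "r": 1, "u": 1, "x": 1,
}

codes = huffman_codes(FREQS)
total_bits = sum(FREQS[s] * len(codes[s]) for s in FREQS)
print(total_bits)
```

The individual code words may differ from those in the table, because ties between equal weights can be broken in different ways. The total number of bits is the same for any Huffman code built from these weights.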

History

In 1951, David A. Huffman and his MIT information theory classmates were given the choice of a term paper or a final exam. The professor, Robert M. Fano, assigned a term paper on the problem of finding the most efficient binary code. Huffman, unable to prove any codes were the most efficient, was about to give up and start studying for the final when he hit upon the idea of using a frequency-sorted binary tree and quickly proved this method the most efficient.[5]

In doing so, Huffman outdid Fano, who had worked with Claude Shannon to develop a similar code. Building the tree from the bottom up guaranteed optimality, unlike the top-down approach of Shannon–Fano coding.

References

1. D. A. Huffman (1952). "A method for the construction of minimum-redundancy codes" (PDF). Proceedings of the I.R.E.: 1098–1101.
2. Van Leeuwen, Jan (1976). "On the construction of Huffman trees" (PDF). ICALP: 382–410. Retrieved 20 February 2014.
3. Ze-Nian Li; Mark S. Drew; Jiangchuan Liu (9 April 2014). Fundamentals of Multimedia. Springer Science & Business Media. ISBN 978-3-319-05290-8.
4. J. Duda; K. Tahboub; N. J. Gadil; E. J. Delp (2015). "The use of asymmetric numeral systems as an accurate replacement for Huffman coding". Picture Coding Symposium.
5. Huffman, Ken (1991). "Profile: David A. Huffman: Encoding the "Neatness" of Ones and Zeroes". Scientific American: 54–58.